Exploring Computational Creativity with Neural Networks

The FloydHub #humansofml interview with Kalai Ramea - a researcher at Palo Alto Research Center where she works on fundamental machine learning research involving computer vision, NLP, and big data analytics.

Kalai Ramea is a researcher at Palo Alto Research Center (previously known as Xerox PARC) where she works on fundamental machine learning research involving computer vision, NLP, and big data analytics. Before joining the team at PARC, Kalai received her Ph.D. from the University of California, Davis, where she focused on developing quantitative models in the domains of energy, transportation, and climate.

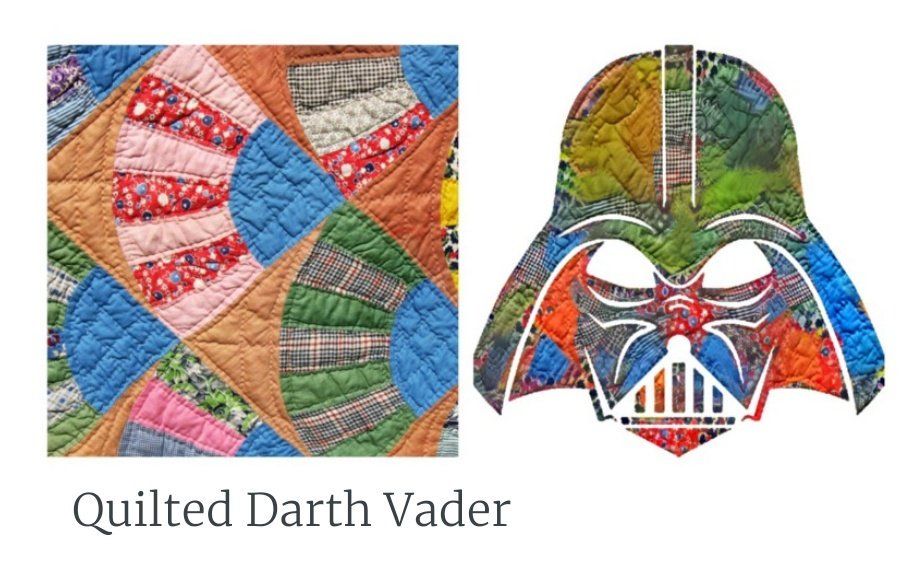

Quilted Darth Vader, by Xerox PARC researcher and https://t.co/ktYtgBpxGr alum @KalaiRamea. Nice interview about her work with computational creativity: https://t.co/Wh3DOGN6ST pic.twitter.com/2Yv932saHA

— Rachel Thomas (@math_rachel) July 30, 2018

Outside of work, Kalai explores “computational creativity.” Recently, she’s been dabbling in deep learning, particularly its application in art. In 2017, she presented her project ‘Intricate Art Generation’ at the Self-Organizing Conference on Machine Learning.

After I discovered the quilted Darth Vader generated by Kalai’s project, I was hooked -- finally, the perfect gift for that newborn Sith Lord in your Instagram feed. In this #humansofml interview, Kalai and I chat about her Intricate Art project and her explorations in the brave new art world of deep learning.

This is the first time I’ve heard the phrase “computational creativity.” I love the term. What does it mean to you?

Yes, I love it too! The field of “computational creativity” covers many things—ranging from using computers to create art to using algorithms to understand human creativity. I am specifically interested in using AI as a tool to generate art. To clarify, this doesn’t mean artists will be “automated” by bots. We still need people to bring their passion and creativity to generate art pieces.

Take this analogy: in the past, painters had to mix their own colors from different sources; now, ready-made paints are available in tubes. I believe AI could act as another medium for people to express themselves.

Let’s talk about zentangles. What are they and how did you first encounter them?

I first saw my friend (who is very artistic) do it as a “wind down” activity (note the ‘zen’ in zentangles). These are typically a set of simple doodles drawn within a rectangle or other shapes. A collection of simple patterns can lead to intricate visuals at the end.

Have you ever drawn a zentangle from scratch?

Yes. However, I realized very soon that I don’t have the patience to do it.

So, how does your “automated zentangle” project work?

I was reading about the Neural Style Transfer algorithm at that time, so I wanted to test if a deep learning algorithm can take in some of these simple doodles and create its own complex patterns. I know, I know it defeats the purpose of ‘zen’tangles. But, believe me, for some people, coding how to produce zentangles is more relaxing than drawing them!

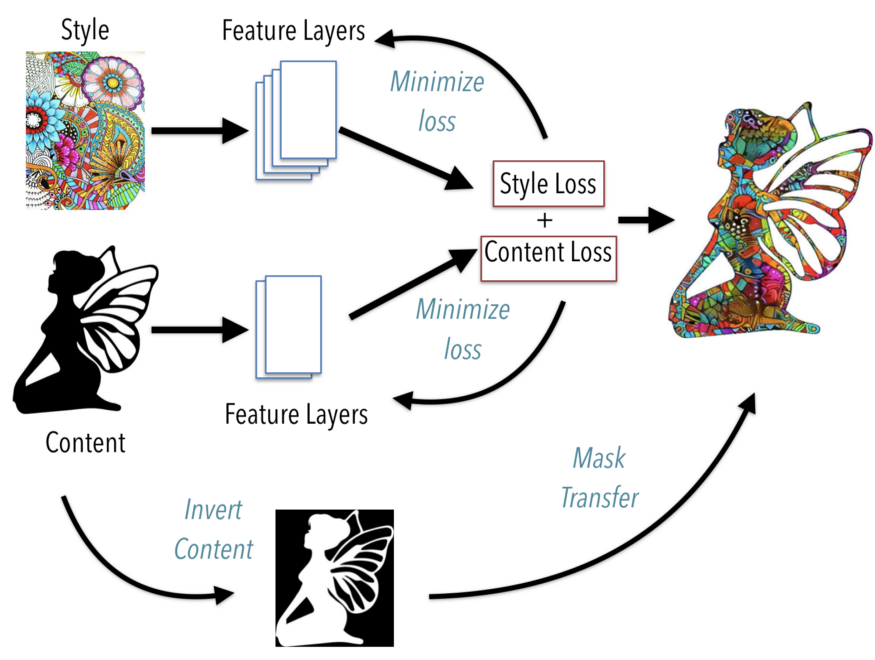

This involves a content image and a style image. Here, the content image is a silhouette, and the style image can be a doodle or a set of doodles. Using pretrained weights from an existing neural architecture (in this case, VGGNET), information from different layers is reconstructed for style and content respectively. The content loss compares the feature maps of the output image with those of the content image, while the style loss compares the Gram matrices (the correlations between feature channels) of the output image and the style image; the combined loss is minimized in each iteration. Thus, the output image has a pattern resembling the ‘style’ image but modified to fit the content shape. I also used a mask transfer at the end to give the patterns a clean look (this filters out the noise outside the silhouettes).
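To make the loss terms a little more concrete, here is a minimal NumPy sketch of how content and style losses are commonly combined in neural style transfer. It is not taken from Kalai's repository: the function names, the weights, and the random arrays standing in for VGG feature maps are all assumptions made for illustration.

```python
import numpy as np


def gram_matrix(features):
    """Channel-by-channel correlations of a (channels, height, width) feature map."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    # Normalize so the value does not grow with the size of the feature map.
    return flat @ flat.T / (c * h * w)


def content_loss(generated, content):
    """Mean squared difference between feature maps of the same shape."""
    return float(np.mean((generated - content) ** 2))


def style_loss(generated, style):
    """Mean squared difference between the Gram matrices of two feature maps."""
    return float(np.mean((gram_matrix(generated) - gram_matrix(style)) ** 2))


def total_loss(generated, content, style, content_weight=1.0, style_weight=1000.0):
    """Weighted sum that an optimizer would minimize; the weights are hypothetical."""
    return (content_weight * content_loss(generated, content)
            + style_weight * style_loss(generated, style))


# Random arrays stand in for feature maps from one network layer (an assumption).
rng = np.random.default_rng(0)
content_feats = rng.random((8, 16, 16))
style_feats = rng.random((8, 16, 16))
generated_feats = content_feats.copy()  # start the output from the content image

print(total_loss(generated_feats, content_feats, style_feats))
```

In a real run, the feature maps would come from several layers of the pretrained network, and the pixels of the output image would be updated step by step to reduce this combined loss.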

Now, let’s try the same question, but as a Reddit-style “Explain Like I’m Five.”

Break the simple doodle into different parts and try to fit that into a shape. Now imagine an algorithm doing that for you.

Nice work! Your observation that using silhouettes improves your model’s results is quite interesting. Why do they help, and how did you figure that out?

I thought about how zentangles are created by hand. Typically, we draw the outline first, then start filling the shape with small doodles. I first tested the algorithm with a black outline and a white fill. But it did not work well, because the solid white fill dominated the generated pattern. The RGB value of white is (255, 255, 255). When I thought about how this works mathematically within a deep learning algorithm, it dawned on me that the white fill was acting like a ‘repellent.’ I then decided to do the opposite and use a color that can act like a ‘sink’: black, whose RGB value is (0, 0, 0). And voila! The output patterns fit like a glove within the shape.
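A black-filled silhouette can also serve as the mask for the clean-up step mentioned earlier. The sketch below shows one plausible way to do that with NumPy; the function name, the threshold, and the white background are assumptions for illustration, not details of Kalai's code.

```python
import numpy as np


def apply_silhouette_mask(generated, silhouette, threshold=128, background=255):
    """Keep generated pixels inside the dark silhouette; paint everything else.

    generated:  (height, width, 3) uint8 image produced by style transfer.
    silhouette: (height, width) uint8 grayscale image, dark inside the shape.
    """
    inside = silhouette < threshold             # True where the shape is black
    result = np.full_like(generated, background)
    result[inside] = generated[inside]          # copy the pattern inside the shape
    return result


# Tiny example: a 2x2 black square centered in a 4x4 white canvas.
silhouette = np.full((4, 4), 255, dtype=np.uint8)
silhouette[1:3, 1:3] = 0
generated = np.full((4, 4, 3), 100, dtype=np.uint8)  # stand-in for the stylized output

cleaned = apply_silhouette_mask(generated, silhouette)
print(cleaned[:, :, 0])
```

Pixels outside the shape are reset to the background color, so any stray pattern noise around the silhouette disappears.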

As you know, I really like your Quilted Darth Vader. What’s your favorite automated zentangle that you generated?

I really like the ‘stained glass’ pattern. This is something I did not expect to happen when I gave a geometric style as input. I like how the algorithm fit the right pieces in the silhouette.

When can I buy a deep learning coloring book from you?

Haha! I am not interested in creating a coloring book. However, I think anyone can use my code to create their own coloring books!

That's great - I've been looking for a good side hustle idea! Is your project publicly available for people to try out with their own patterns?

Yes - the code is open source and available on GitHub. You can even easily reproduce the project on FloydHub by clicking the button in my GitHub repo's README or in this post.

I’m eager to see what people create next with this model!

I’d love to hear about your experience at the Self-Organizing Conference on Machine Learning. Are there any other conferences on your horizon?

SOCML is an ‘unconference’, so by definition there was no agenda and no speakers. Participants decided the discussion topics at the conference, and there were many break-out sessions. This was the second year the event was held. I was a bit skeptical about how it would turn out, but I would say this was one of the best events I have attended. Since the number of attendees was limited, we had a lot of time and opportunity to interact, which is usually not possible at larger conferences. I am hoping to attend SOCML this year too; this time, it is happening in Toronto, Canada. I will also be going to MLConf in November (San Francisco) and NIPS in December (Montreal).

Before we go, can you tell me a little bit more about what it’s like to work at Palo Alto Research Center? I’ve obviously read the Steve Jobs biography, so I know that Xerox PARC is where much (if not all) of the inspiration for the GUI and the mouse comes from. Can you give us a glimpse of what’s on the horizon for personal computing? Are you typing these answers via hands-free mind-control or something like that? Blink twice if yes.

*Keeping my eyes wide open* Haha!

I love working at PARC! One of the best things here is the flexibility to work on different kinds of projects, and of course, the opportunity to interact with the amazing researchers. Currently, I am working on developing an intelligent writing assistant system. There is also some interesting work going on in the augmented reality area. Some researchers are working on developing an explainable AI system.

The field of deep learning and AI is constantly moving forward -- what are you hoping to learn or tackle next?

I consider myself a machine learning generalist: I work on both NLP and computer vision at the moment. I am extremely interested in the application of these techniques in science and social science areas (e.g. climate science, public policy, energy, etc.). I believe there are several low-hanging-fruit problems to solve as we apply transfer learning in these fields. And in many cases, depending on the dataset, we might push the envelope to develop new techniques.

Any advice for people who want to get started with deep learning?

Don’t be intimidated! There are several resources nowadays to get started with deep learning. I highly recommend the fast.ai (http://www.fast.ai/) deep learning courses by Jeremy Howard and Rachel Thomas. In fact, I learned neural style transfer through their videos before I started experimenting with it.

What’s next for you?

I want to continue my work in the scientist-artist continuum! I am currently learning how to use pre-trained models for natural language processing. As for my artistic side, my dream project is to combine computer vision and NLP to generate visual notes from raw text!

Where can people go to learn more about you and your work?

Follow me on Twitter: @KalaiRamea. My personal website is https://www.kalairamea.com/.