Practical Guide to Hyperparameters Optimization for Deep Learning Models

Learn techniques for identifying the best hyperparameters for your deep learning projects, including code samples that you can use to get started on FloydHub.

Are you tired of babysitting your DL models? If so, you're in the right place. In this post, we discuss motivations and strategies behind effectively searching for the best set of hyperparameters for any deep learning model. We'll demonstrate how this can be done on FloydHub, as well as which direction the research is moving. When you're done reading this post, you'll have added some powerful new tools to your data science tool-belt – making the process of finding the best configuration for your deep learning task as automatic as possible.

Unlike machine learning models, deep learning models are literally full of hyperparameters. Would you like some evidence? Just take a look at the Transformer base v1 hyperparameters definition.

I rest my case.

Of course, not all of these variables contribute in the same way to the model's learning process, but, given this additional complexity, it's clear that finding the best configuration for these variables in such a high dimensional space is not a trivial challenge.

Luckily, we have different strategies and tools for tackling the searching problem. Let's dive in!

Our Goal

How?

We want to find the best configuration of hyperparameters which will give us the best score on the metric we care about on the validation / test set.

Why?

Every scientist and researcher wants the best model for the task given the available resources: 💻, 💰 and ⏳ (aka compute, money, and time). Effective hyperparameter search is the missing piece of the puzzle that will help us move towards this goal.

When?

- It's quite common among researchers and hobbyists to try one of these searching strategies during the last steps of development. This helps provide possible improvements from the best model obtained already after several hours of work.

- Hyperparameter search is also common as a stage or component in a semi/fully automatic deep learning pipeline. This is, obviously, more common among data science teams at companies.

Wait, but what exactly are hyperparameters?

Let's start with the simplest possible definition,

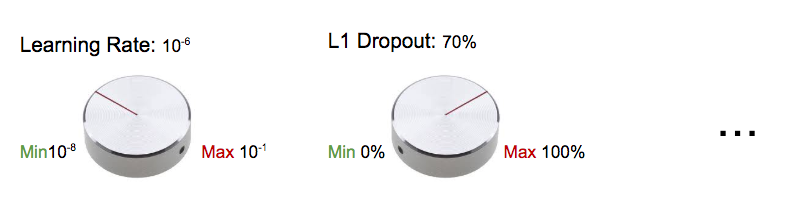

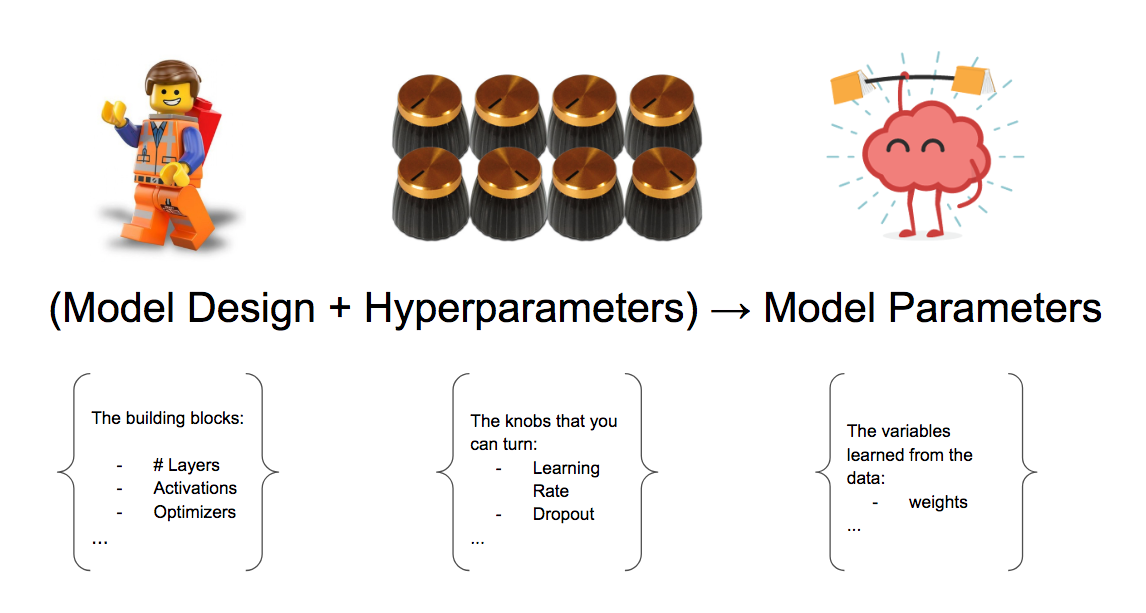

Hyperparameters are the knobs that you can turn when building your machine / deep learning model.

Or, alternatively:

Hyperparameters are all the training variables set manually with a pre-determined value before starting the training.

We can likely agree that the Learning Rate and the Dropout Rate are considered hyperparameters, but what about the model design variables? These include embeddings, number of layers, activation function, and so on. Should we consider these variables as hyperparameters?

For simplicity's sake, yes – we can also consider the model design components as part of the hyperparameters set.

Finally, how about the parameters obtained from the training process – the variables learned from the data? These weights are known as model parameters. We'll exclude them from our hyperparameter set.

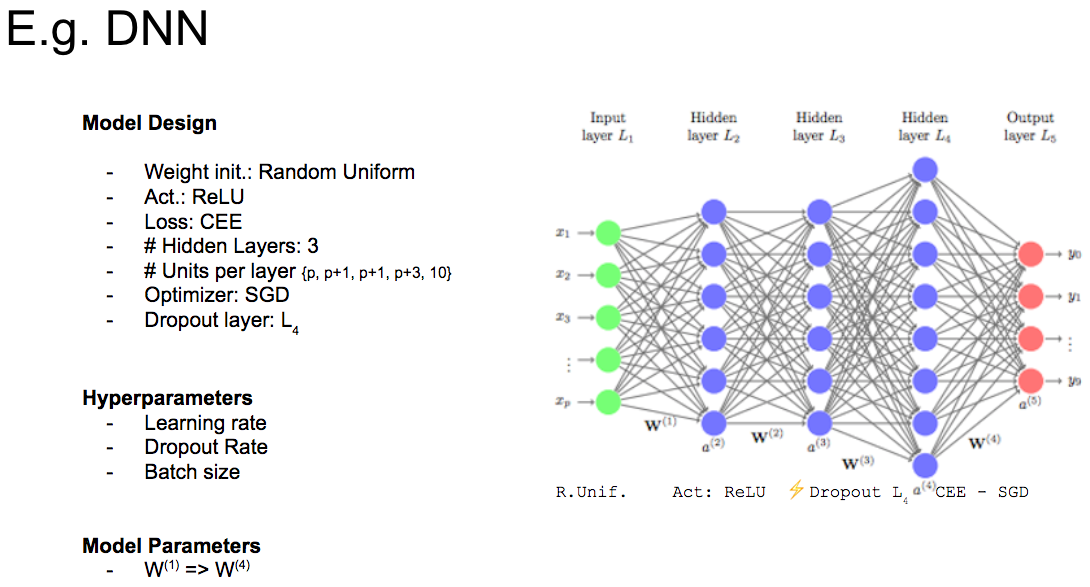

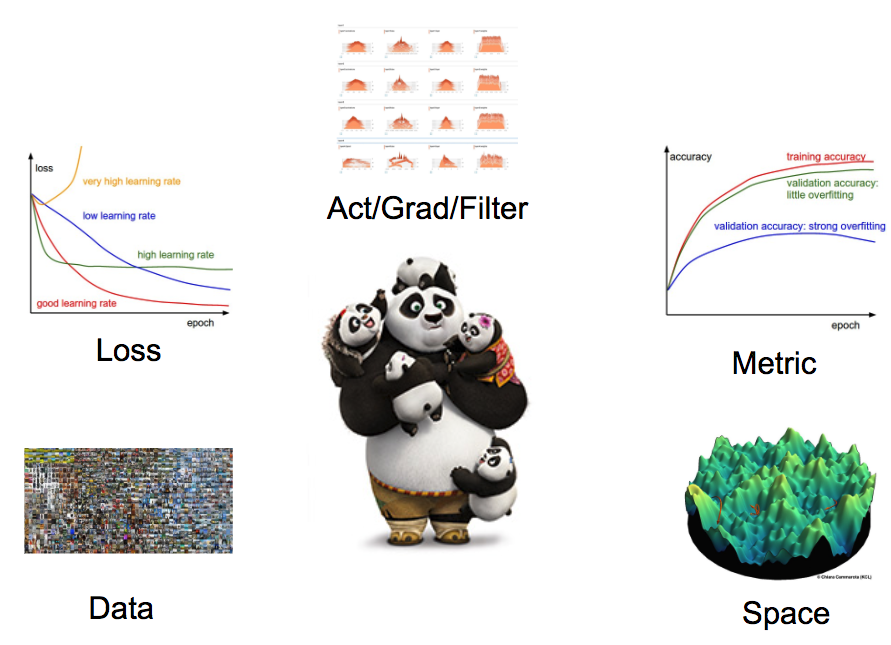

Okay, let's try a real-world example. Take a look at the picture below for an example illustrating the different classifications of variables in a deep learning model.

Our next problem: searching is expensive

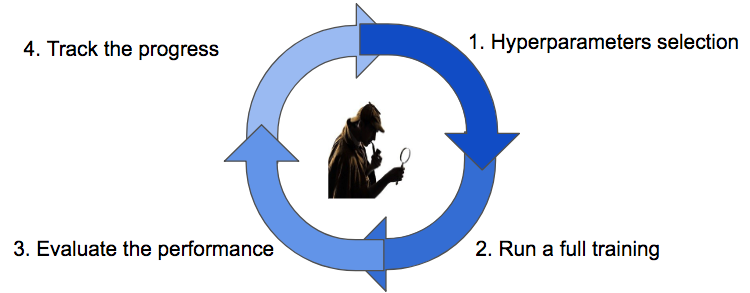

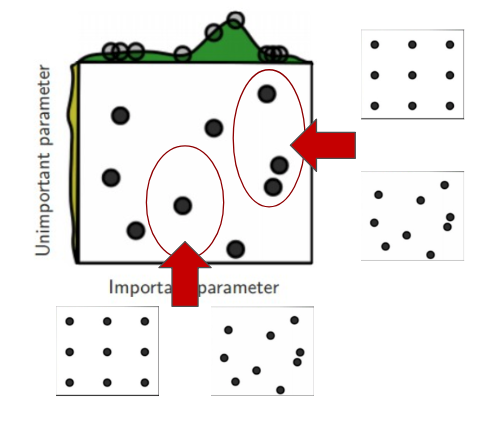

Now that we know we want to search for the best configuration of hyperparameters, we're faced with the challenge that searching for hyperparameters is an iterative process constrained by 💻, 💰 and ⏳.

Everything starts with a guess (step 1) of a promising configuration, then we will need to wait until a full training (step 2) to get the actual evaluation on the metric of interest (step 3). We'll track the progress of the searching process (step 4), and then according to our searching strategy, we'll select a new guess (step 1).

We'll keep going like this until we reach a terminating condition (such as running out of ⏳ or 💰).

Let's talk strategies

We have four main strategies available for searching for the best configuration.

- Babysitting (aka Trial & Error)

- Grid Search

- Random Search

- Bayesian Optimization

Babysitting

Babysitting is also known as Trial & Error or Grad Student Descent in the academic field. This approach is 100% manual and the most widely adopted by researchers, students, and hobbyists.

The end-to-end workflow is really quite simple: a student devises a new experiment that she follows through all the steps of the learning process (from data collection to feature map visualization), then will she iterates sequentially on the hyperparameters until she runs out time (usually due to a deadline) or motivation.

If you've enrolled in the deeplearning.ai course, then you're familiar with this approach - it is the Panda workflow described by Professor Andrew Ng.

This approach is very educational, but it doesn't scale inside a team or a company where the time of the data scientist is really valuable.

Thus, we arrive at the question:

“Is there a better way to invest my time?”

Surely, yes! We can optimize your time by defining an automatic strategy for hyperparameter searching!

Grid Search

Taken from the imperative command "Just try everything!" comes Grid Search – a naive approach of simply trying every possible configuration.

Here's the workflow:

- Define a grid on n dimensions, where each of these maps for an hyperparameter. e.g. n = (learning_rate, dropout_rate, batch_size)

- For each dimension, define the range of possible values: e.g. batch_size = [4, 8, 16, 32, 64, 128, 256]

- Search for all the possible configurations and wait for the results to establish the best one: e.g. C1 = (0.1, 0.3, 4) -> acc = 92%, C2 = (0.1, 0.35, 4) -> acc = 92.3%, etc...

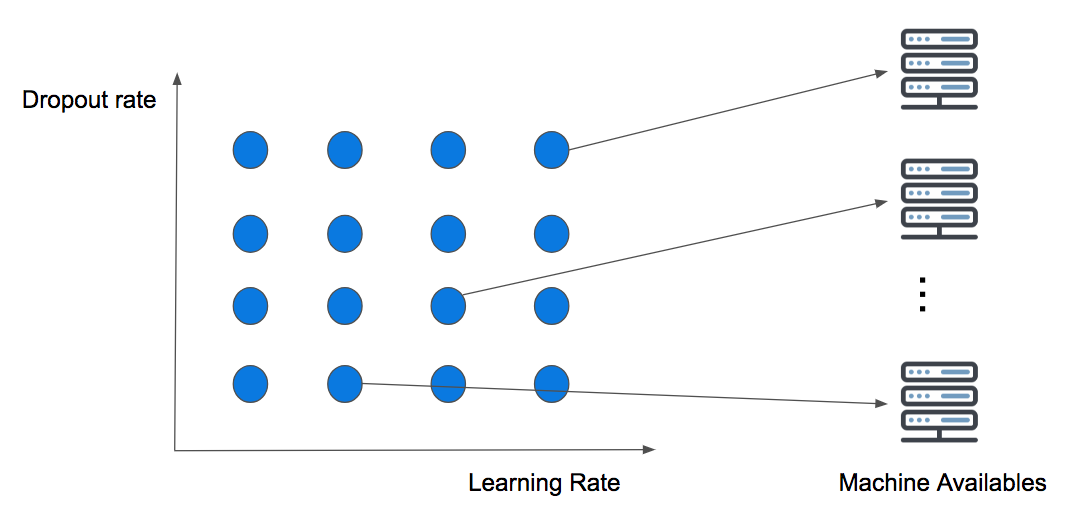

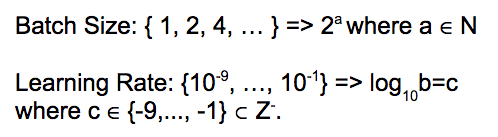

The image below illustrates a simple grid search on two dimensions for the Dropout and Learning rate.

This strategy is embarrassingly parallel because it doesn't take into account the computation history (we will expand this soon). But what it does mean is that the more computational resources 💻 you have available, then the more guesses you can try at the same time!

The real pain point of this approach is known as the curse of dimensionality. This means that more dimensions we add, the more the search will explode in time complexity (usually by an exponential factor), ultimately making this strategy unfeasible!

It's common to use this approach when the dimensions are less than or equal to 4. But, in practice, even if it guarantees to find the best configuration at the end, it's still not preferable. Instead, it's better to use Random Search — which we'll discuss next.

Try grid search now!

Click this button to open a Workspace on FloydHub. You can use the workspace to run the code below (Grid Search using Scikit-learn and Keras) on a fully configured cloud machine.

# Load the dataset

x, y = load_dataset()

# Create model for KerasClassifier

def create_model(hparams1=dvalue,

hparams2=dvalue,

...

hparamsn=dvalue):

# Model definition

...

model = KerasClassifier(build_fn=create_model)

# Define the range

hparams1 = [2, 4, ...]

hparams2 = ['elu', 'relu', ...]

...

hparamsn = [1, 2, 3, 4, ...]

# Prepare the Grid

param_grid = dict(hparams1=hparams1,

hparams2=hparams2,

...

hparamsn=hparamsn)

# GridSearch in action

grid = GridSearchCV(estimator=model,

param_grid=param_grid,

n_jobs=,

cv=,

verbose=)

grid_result = grid.fit(x, y)

# Show the results

print("Best: %f using %s" % (grid_result.best_score_, grid_result.best_params_))

means = grid_result.cv_results_['mean_test_score']

stds = grid_result.cv_results_['std_test_score']

params = grid_result.cv_results_['params']

for mean, stdev, param in zip(means, stds, params):

print("%f (%f) with: %r" % (mean, stdev, param))

Random Search

A few years ago, Bergstra and Bengio published an amazing paper where they demonstrated the inefficiency of Grid Search.

The only real difference between Grid Search and Random Search is on the step 1 of the strategy cycle – Random Search picks the point randomly from the configuration space.

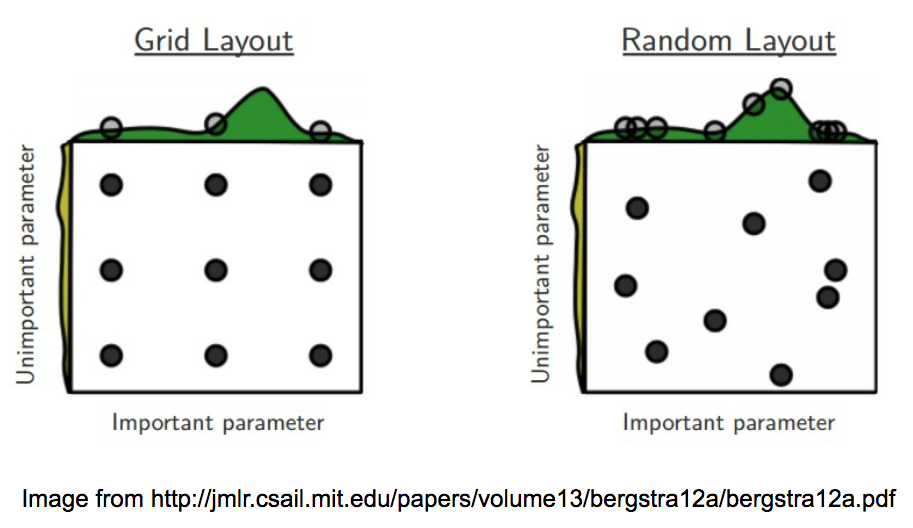

Let's use the image below (provided in the paper) to show the claims reported by the researchers.

The image compares the two approaches by searching the best configuration on two hyperparameters space. It also assumes that one parameter is more important that the other one. This is a safe assumption because Deep Learning models, as mentioned at the beginning, are really full of hyperparameters, and usually the researcher / scientist / student knows which ones affect the training most significantly.

In the Grid Layout, it's easy to notice that, even if we have trained 9 models, we have used only 3 values per variable! Whereas, with the Random Layout, it's extremely unlikely that we will select the same variables more than once. It ends up that, with the second approach, we will have trained 9 models using 9 different values for each variable.

As you can tell from the space exploration at the top of each layout in the image, we have explored the hyperparameters space more widely with Random Search (especially for the more important variables). This will help us to find the best configuration in fewer iterations.

In summary: Don't use Grid Search if your searching space contains more than 3 to 4 dimensions. Instead, use Random Search, which provides a really good baseline for each searching task.

Try Random Search now!

Click this button to open a Workspace on FloydHub. You can use the workspace to run the code below (Random Search using Scikit-learn and Keras.) on a fully configured cloud machine.

# Load the dataset

X, Y = load_dataset()

# Create model for KerasClassifier

def create_model(hparams1=dvalue,

hparams2=dvalue,

...

hparamsn=dvalue):

# Model definition

...

model = KerasClassifier(build_fn=create_model)

# Specify parameters and distributions to sample from

hparams1 = randint(1, 100)

hparams2 = ['elu', 'relu', ...]

...

hparamsn = uniform(0, 1)

# Prepare the Dict for the Search

param_dist = dict(hparams1=hparams1,

hparams2=hparams2,

...

hparamsn=hparamsn)

# Search in action!

n_iter_search = 16 # Number of parameter settings that are sampled.

random_search = RandomizedSearchCV(estimator=model,

param_distributions=param_dist,

n_iter=n_iter_search,

n_jobs=,

cv=,

verbose=)

random_search.fit(X, Y)

# Show the results

print("Best: %f using %s" % (random_search.best_score_, random_search.best_params_))

means = random_search.cv_results_['mean_test_score']

stds = random_search.cv_results_['std_test_score']

params = random_search.cv_results_['params']

for mean, stdev, param in zip(means, stds, params):

print("%f (%f) with: %r" % (mean, stdev, param))

One step back, two steps forward

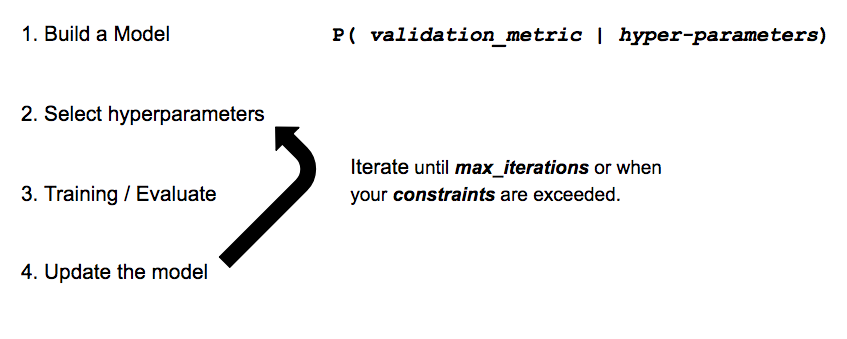

As an aside, when you need to set the space for each dimension, it's very important to use the right scale per each variables.

For example, it's common to use values of batch size as a power of 2 and sample the learning rate in the log scale.

It's also very common to start with one of the layouts above for a certain number of iterations, and then zoom into a promising subspace by sampling more densely in each variables range, and even starting a new search with the same or a different searching strategy.

Yet another problem: independent guesses!

Unfortunately, both Grid and Random Search share the common downside:

“Each new guess is independent from the previous run!”

It can sound strange and surprising, but what makes Babysitting effective – despite the amount of time required – is the ability of the scientist to drive the search and experimentation effectively by using the past as a resource to improve the next runs.

Wait a minute, this sounds familiar... what if we try to model the hyperparameter search as a machine learning task?!

Allow me to introduce Bayesian Optimization.

Bayesian Optimization

This search strategy builds a surrogate model that tries to predict the metrics we care about from the hyperparameters configuration.

At each new iteration, the surrogate we will become more and more confident about which new guess can lead to improvements. Just like the other search strategies, it shares the same termination condition.

If this sounds confusing right now, don't worry – it's time for another visual example.

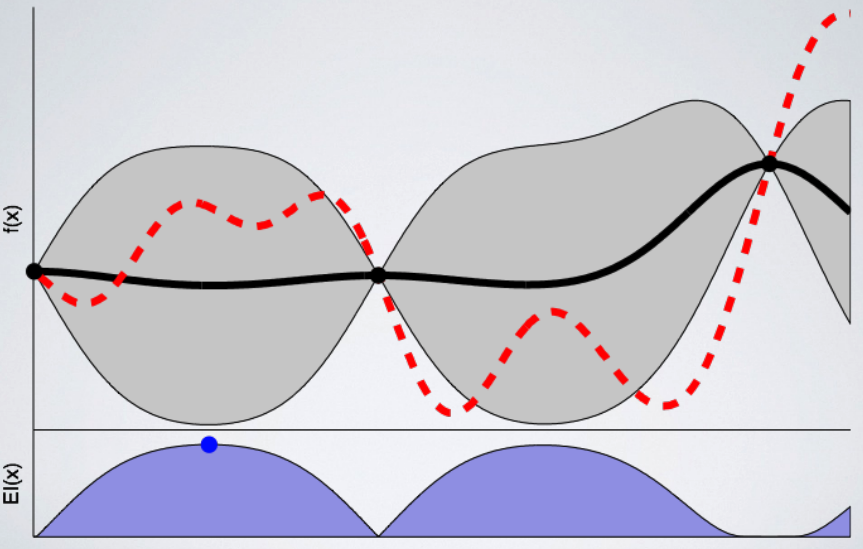

The Gaussian Process in action

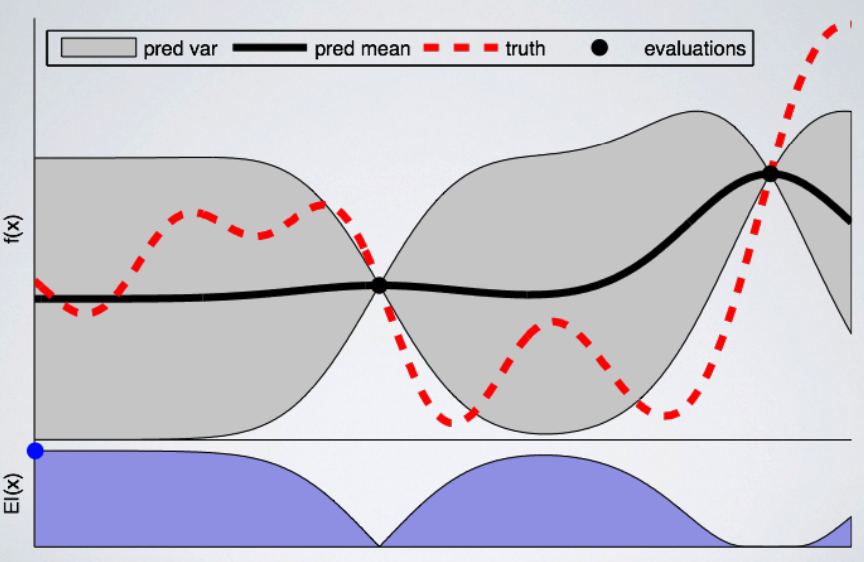

We can define the Gaussian Process as the surrogate that will learn the mapping from hyperparameters configuration to the metric of interest. It will not only produce the prediction as a value, but it will also give us the range of uncertainty (mean and variance).

Let's dive into the example provided by this great tutorial.

In the above image, we are following the first steps of a Gaussian Process optimization on a single variable (on the horizontal axes). In our imaginary example, this can represent the learning rate or dropout rate.

On the vertical axes, we are plotting the metrics of interest as a function of the single hyperparameter. Since we're looking for the lowest possible value, we can think of it as the loss function.

The black dots represent the model trained so far. The red line is the ground truth, or, in other words, the function that we are trying to learn. The black line represents the mean of the actual hypothesis we have for the ground truth function and the grey area shows the related uncertainty, or variance, in the space.

As we can notice, the uncertainty diminishes around the dots because we are quite confident about the results we can get around these points (since we've already trained the model here). The uncertainty, then, increases in the areas where we have less information.

Now that we've defined the starting point, we're ready to choose the next promising variables on which train a model. For doing this, we need to define an acquisition function which will tell us where to sample the next configuration.

In this example, we are using the Expected Improvement: a function that is aiming to find the lowest possible value if we will use the proposed configuration from the uncertainty area. The blue dot in the Expected Improvement chart above shows the point selected for the next training.

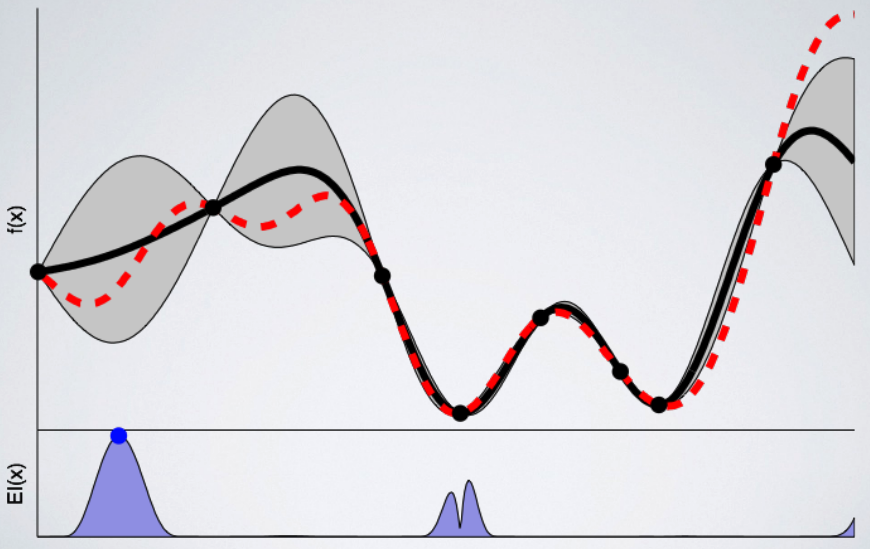

The more models we train, the more confident the surrogate will become about the next promising points to sample. Here's the chart after 8 trained models:

The Gaussian Process falls under the class of algorithms called Sequential Model Based Optimization (SMBO). As we've just seen, these algorithms provide a really good baseline to start the search for the best hyperparameter configuration. But, just like every tool, they come with their downsides:

- By definition, the process is sequential

- It can only handle numeric parameters

- It doesn't provide any mechanism to stop the training if it's performing poorly

Please note that we've really just scratched the surface about this fascinating topic, and if you're interested in a more detailed reading and how to extend SMBO, then take a look at this paper.

Try Bayesian Optimization now!

Click this button to open a Workspace on FloydHub. You can use the workspace to run the code below (Bayesian Optimization (SMBO - TPE) using Hyperas) on a fully configured cloud machine.

def data():

"""

Data providing function:

This function is separated from model() so that hyperopt

won't reload data for each evaluation run.

"""

# Load / Cleaning / Preprocessing

...

return x_train, y_train, x_test, y_test

def model(x_train, y_train, x_test, y_test):

"""

Model providing function:

Create Keras model with double curly brackets dropped-in as needed.

Return value has to be a valid python dictionary with two customary keys:

- loss: Specify a numeric evaluation metric to be minimized

- status: Just use STATUS_OK and see hyperopt documentation if not feasible

The last one is optional, though recommended, namely:

- model: specify the model just created so that we can later use it again.

"""

# Model definition / hyperparameters space definition / fit / eval

return {'loss': <metrics_to_minimize>, 'status': STATUS_OK, 'model': model}

# SMBO - TPE in action

best_run, best_model = optim.minimize(model=model,

data=data,

algo=tpe.suggest,

max_evals=,

trials=Trials())

# Show the results

x_train, y_train, x_test, y_test = data()

print("Evalutation of best performing model:")

print(best_model.evaluate(x_test, y_test))

print("Best performing model chosen hyper-parameters:")

print(best_run)

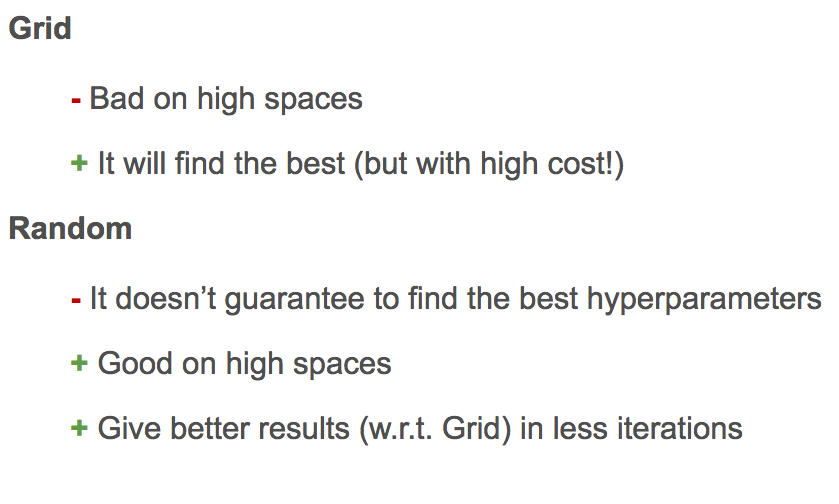

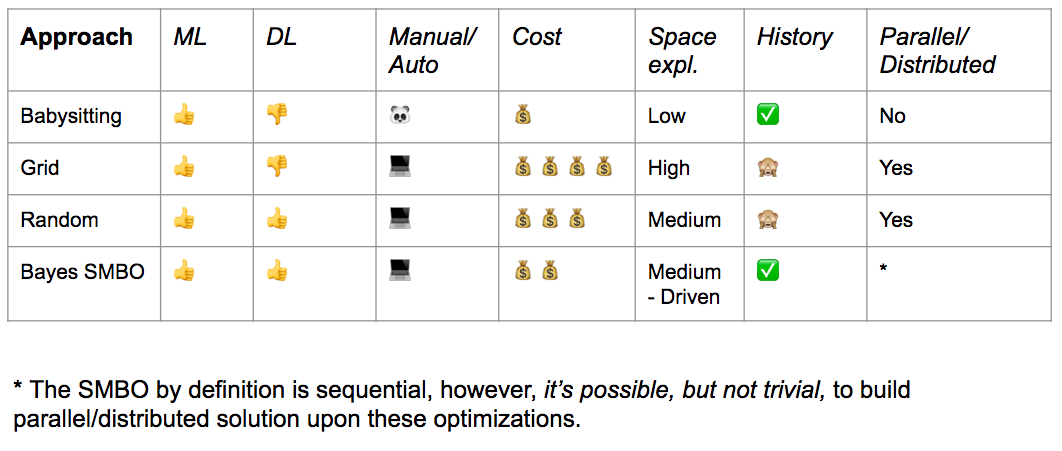

Search strategy comparison

It's finally time to summarize what we've covered so far to understand the strengths and weakness of each proposal.

Bayes SMBO is probably the best candidate as long as resources are not a constraint for you or your team, but you should also consider establishing a baseline with Random Search.

On the other hand, if you're still learning or in the development phase, then babysitting – even if unpractical in term of space exploration – is the way to go.

Just like I mentioned in the SMBO section, none of these strategies provide a mechanism to save our resources if a training is performing poorly or even worse diverging – we'll have to wait until the end of the computation.

Thus, we arrive at the last question of our fantastic quest:

“Can we optimize the training time?”

Let's find out.

The power of stopping earlier

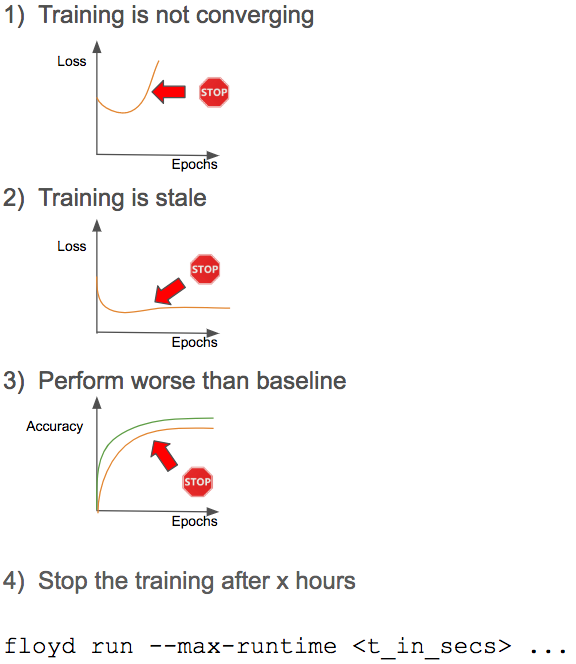

Early Stopping is not only a famous regularization technique, but it also provides a great mechanism for preventing a waste of resources when the training is not going in the right direction.

Here's a diagram of the most adopted stopping criteria:

The first three criteria are self-explanatory, so let's focus our attention to the last one.

It's common to cap the training time according to the class of experiment inside the research lab. This policy acts as a funnel for the experiments and optimizes for the resources inside the team. In this way, we will be able to allocate more resources only to the most promising experiments.

The floyd-cli (the software used by our users to communicate with FloydHub and that we've open-sourced on Github) provides a flag with this purpose: our power users are using it massively to regulate their experiments.

These criteria can be applied manually when babysitting the learning process, or you can do even better by integrated these rules in your experiment through the hooks/callbacks provided in the most common frameworks:

- Keras provides a great EarlyStopping function and even better a suite of super useful callbacks. Since Keras has been recently integrated inside TensorFlow, you will be able to use the callbacks inside your TensorFlow code.

- TensorFlow provides the Training Hooks, these are probably not intuitive as Keras callbacks (or the tf.keras API), but they provides you more control over the state of the execution. TensorFlow 2.0 (currently in beta) introduces a new API for managing hyperparameters optimization, you can find more info in the official TensorFlow docs.

- There is even more in the TensorFlow/Keras realm! The Keras team has just released an hyperparameter tuner for Keras, specifically for

tf.keraswith TensorFlow 2.0.

Keras Tuner is now out of beta! v1 is out on PyPI.https://t.co/riqnIr4auA

— François Chollet (@fchollet) October 31, 2019

Fully-featured, scalable, easy-to-use hyperparameter tuning for Keras & beyond. pic.twitter.com/zUDISXPdBw

- At this time, PyTorch hasn't yet provided a hooks or callbacks component, but you can check the TorchSample repo and in the amazing Forum.

- The fast.ai library provides callbacks too, you can find more info in the official fastai callbacks doc page. If you are lost or need some help, I strongly recommend you to reach the amazing fast.ai community.

- Ignite (high-level library of PyTorch) provides callbacks similarly to Keras. The library is actually under active development but it certainly seems a really interesting option.

I will stop the list here to limit the discussion to the most used / trending frameworks (I hope to not have hurt the sensibility of the other frameworks' authors. If so, you can direct your complaints to me and I'll be happy to update the content!)

This is not the end.

There is a subfield of machine learning called “AutoML” (Automatic Machine Learning) which aims to automate methods for model selection, features extraction and / or hyperparameters optimization.

This tool is the answer to the last question (I promise!):

“Can we learn the whole process?”

You can think of AutoML as Machine Learning task which is solving another Machine Learning task, similar to what we've done with the Baeysian Optimiziation. Essentially, this is Meta-Machine Learning.

Research: AutoML and PBT

You have most likely heard of Google's AutoML which is their re-branding for Neural Architecture Search. Remember, all the way at the beginning of the article, we decided to merge the model design component into the hyperparameters variables? Well, Neural Architecture Search is the subfield of AutoML which aims to find the best models for a given task. A full discussion on this topic would require a series of articles. Luckily, Dr. Rachel Thomas at fast.ai did an amazing job that we are happy to link!

I would like to share with you another interesting research effort from DeepMind where they used a variant of Evolution Strategy algorithm to perform hyperparameters search called Population Based Training (PTB is also at the foundation of another amazing research from DeepMind which wasn't quite covered from the press but that I strongly encourage you to check out on your own). Quoting DeepMind:

PBT - like random search - starts by training many neural networks in parallel with random hyperparameters. But instead of the networks training independently, it uses information from the rest of the population to refine the hyperparameters and direct computational resources to models which show promise. This takes its inspiration from genetic algorithms where each member of the population, known as a worker, can exploit information from the remainder of the population. For example, a worker might copy the model parameters from a better performing worker. It can also explore new hyperparameters by changing the current values randomly.

Of course, there are probably tons of other super interesting researches in this area. I've just shared with you the ones who gained some recent prominence in the news.

Managing your experiments on FloydHub

One of the biggest features of FloydHub is the ability to compare different model you're training when using a different set of hyperparameters.

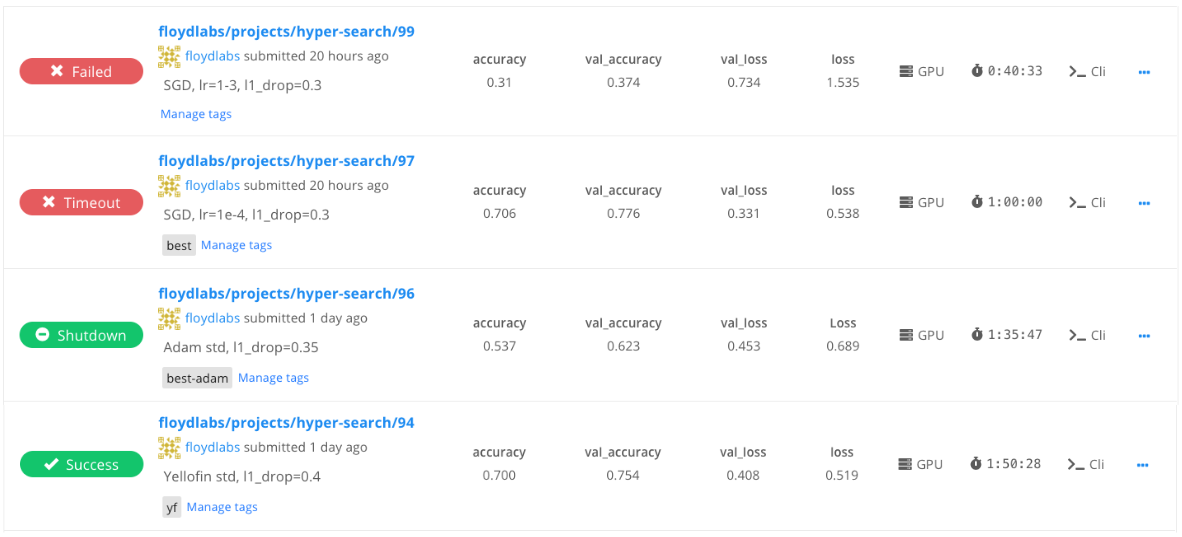

The picture below shows a list of jobs in a FloydHub project. You can see that this user is using the job's message field (e.g. floyd run --message "SGD, lr=1e-3, l1_drop=0.3" ... ) to highlight the hyperparameters used on each of these jobs.

Additionally, you can also see the training metrics for each job. These offer a quick glance to help you understand which of these jobs performed best, as well as the type of machine used and the total training time.

The FloydHub dashboard gives you an easy way to compare all the training you've done in your hyperparameter optimization – and it updates in real-time.

Our advice is to create a different FloydHub project for each of the tasks/problems you have to solve. In this way, it's easier for you to organize your work and collaborate with your team.

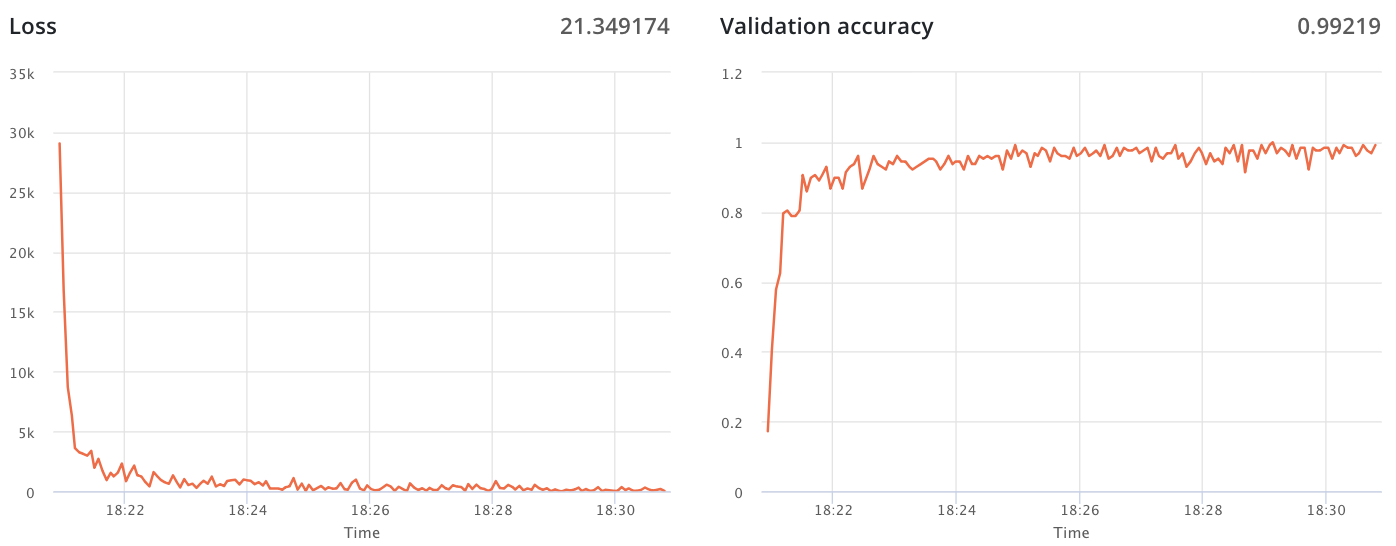

Training metrics

As mentioned above, you can easily emit training metrics with your jobs on FloydHub. When you view your job on the FloydHub dashboard, you'll find real-time charts for each of the metrics you've defined.

This feature is not intended to substitute Tensorboard (we provides this feature as well), but instead aims to highlight the behavior of your training given the configuration of hyperparameters you've selected.

For example, if you're babysitting the training process, then the training metrics will certainly help you to determine and apply the stopping criteria.

FloydHub HyperSearch (Coming soon!)

We are currently planning to release some examples of how to wrap the floyd-cli command line tool with these proposed strategies to effectively run hyperparameters search on FloydHub. So, stay tuned!

One last thing!

Some of the FloydHub users have asked for a simplified hyperparameter search solution (similar to the solution proposed in the Google Vizier paper) inside FloydHub. If you think this would be useful to you, please let us know by contacting our support or by posting on the Forum.

We're really excited to improve FloydHub to meet all your training needs!

Do you model for living? 👩💻 🤖 Be part of a ML/DL user research study and get a cool AI t-shirt every month 💥

We are looking for full-time data scientists for a ML/DL user study. You'll be participating in a calibrated user research experiment for 45 minutes. The study will be done over a video call. We've got plenty of funny tees that you can show-off to your teammates. We'll ship you a different one every month for a year!

Click here to learn more.

FloydHub Call for AI writers

Want to write amazing articles like Alessio and play your role in the long road to Artificial General Intelligence? We are looking for passionate writers, to build the world's best blog for practical applications of groundbreaking A.I. techniques. FloydHub has a large reach within the AI community and with your help, we can inspire the next wave of AI. Apply now and join the crew!