Generating Classical Music with Neural Networks

Christine McLeavey Payne may have finally cured songwriter's block. Her recent project Clara is a long short-term memory (LSTM) neural network that composes piano and chamber music. Just give Clara a taste of your magnum-opus-in-progress, and Clara will figure out what you should play next.

Christine McLeavey Payne may have finally cured songwriter's block. Her recent project Clara is a long short-term memory (LSTM) neural network that composes piano and chamber music. Just give Clara a taste of your magnum-opus-in-progress, and Clara will figure out what you should play next.

Take a listen to this jazz piano selection generated by Clara:

Want more? Clara can also write duets – here's a violin and piano composition:

Christine is a Fellow on the Technical Staff at OpenAI, a non-profit AI research company focused on discovering and enacting the path to safe artificial general intelligence. At OpenAI, Christine focuses on Multiagent Learning, studying how agents learn very advanced behaviors by competing and cooperating with other agents, rather than what they could learn on their own in an environment.

Christine's also an accomplished classical pianist – she graduated from The Juilliard School and is the founding pianist of Ensemble San Francisco. She also has a Master’s Degree in Neuroscience from Stanford University. Oh, and she has two young kids!

She's pretty much the coolest person in the universe.

As you might expect, I'm thrilled to share my conversation with Christine for this Humans of Machine Learning (#humansofml) interview. In this post, we're going to dig into the neural network behind Clara, as well as Christine's own journey with AI and deep learning.

Okay, let's dive in. How exactly does Clara work?

Clara is based on the idea that you can generate music in the same way that you generate language.

When we train a language model, we feed the neural network a paragraph and then ask it to predict the next word (“to be or not to ___”). Once you have a model that is good at predicting the next word, you can easily turn that into a generator. As it predicts a next word, you then feed that word back into the model and ask it to predict the next word after that, and so forth.

Of course, part of the difficulty is figuring out how to translate words (or notes) into vectors that you can pass directly into the model. A lot of my work was finding a good encoding for the music!

Why did you use an long short-term memory (LSTM) architecture for Clara?

Jeremy Howard and Sebastien Ruder achieved great results using an LSTM for language model. More recently, there is a shift towards using a Transformer architecture, and right now I’m experimenting with that as well.

Let’s talk more about the data and the data encoding process for Clara. Where did you get the music for the dataset?

I used midi files from Classical Archives, a collection of files that many different musicians have submitted. I found this a particularly useful collection, partly because it is so large, and partly because pieces are separated by composer. Unlike some other classical music datasets, I could easily control which pieces were included, and I could ensure that I didn’t have multiple copies of the same piece performed by different pianists (I didn’t want to contaminate things by having a single piece show up in both my training set and my validation set).

Similarly, I collected jazz pieces from different sources, including this library. I used Beautiful Soup to scrape web pages for all the midi files.

You decided to encode these midi files into a text format. Can you tell us more about that process?

This is a two part process. I first use MIT’s music21 library to translate the midi files into a music21 stream. This provides me with the notes and their timing offsets (now with respect to the tempo of the piece).

I then translated those notes and timings into either my chordwise or notewise encodings. I’ll explain those terms in just a moment.

First, let me explain a few extra tricks around encoding the timing of the rests.

Wait, what’s a rest?

A rest just means silence. You can have rests of different lengths that tell you how long to wait before playing the next note.

In the chordwise version, I use the term “rest” more generally. Basically, I mean any time step where you don’t play any new notes. The trouble is, if the time steps are frequent (say 4 times, or even 12 times per quarter note), most of the time you’re not playing any new notes. Approximately 40% of the time, you have this “do nothing” indication, and if you naively trained a model on this, the neural net would just learn to predict rest all the time, since at least then it would be correct 40% of the time.

Therefore, I developed a trick where I re-encode the rests. Instead of predicting a single rest token, I instead count the number of total notes in the previous 10 timesteps. This has two functions: first, there’s no way for the model to fall into the easy local minimum of always predicting “rest”. Second, models often train to higher accuracies if you give them an auxiliary but related task. In order to do well on this task, the model has to understand something about the structure of the music.

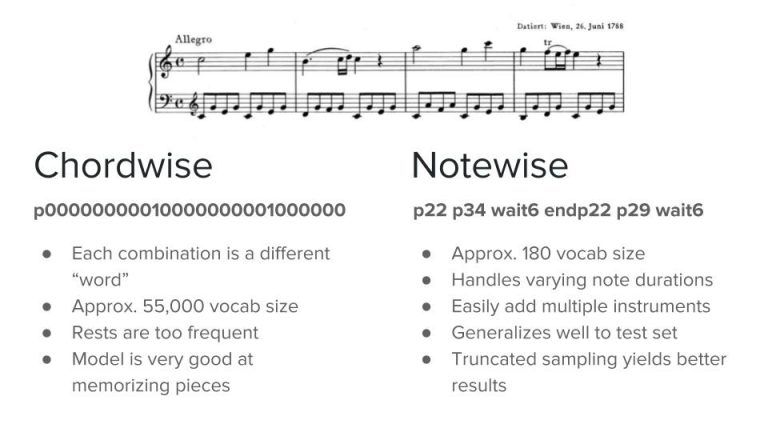

Can you explain these terms chordwise and notewise? Even more generally, what’s a chord versus a note?

Here I’m using “chord” in a very general sense - I mean any combination of notes played at one time. Often this might be a traditional musical chord (for example C major would be C, E, and G played together), but it can be any arbitrary combination. In theory, this could be 2^88 possible different combinations, but in practice music is much more predictable, and I found around 60,000 different chords in the data set.

A chordwise file looks like this:

p00000010000000000100000000 p0000000100000000000000 p00000000000100000000000I’ve shown it here for a small note range (this would be three chords in a row, and the p is for piano). There is a 1 for any note that is played at that time step, and then a 0 otherwise. Next, I take the set of all possible chords, and that is my vocabulary.

A notewise file looks like this

p20 p32 wait3 endp20 p25 endp32 wait12With notewise encodings, I indicate:

- the starts of notes (

p20is the piano playing pitch 20) - the ends of notes (this way you could hold a note for an arbitrarily long time),

- and a wait signal that indicates the end of one musical time step, as well as how long to wait before the next change.

Why did you take two approaches for the encoding of the midi files? Did you try chordwise first, and then move towards notewise based on the model results?

Yes, I’ve been frustrated with the chordwise results. It tends to overfit to the training set (for example, if I prompt it with the start of a Mozart sonata, it then continues generating the whole rest of that sonata). You can listen to a chordwise example here, which fully reproduces the Mozart sonata:

The chordwise encoding didn’t generalize as well, and it also failed on prompts from the validation set.

The notewise version, on the other hand, has a harder time with long term structure, but it is much more flexible, and it performs well even when it moves into territory far from what it trained on. Here is a sample notewise generation from Clara:

I thought your data augmentation strategy was very clever -- transposing the midi files into all possible keys. Please tell me you automated that in some way!

Yes, of course! It’s actually very easy, I can simply add or subtract an offset to every single pitch. I have to admit I was tempted to spend a day “augmenting” the data set by sitting at a piano keyboard and recording myself playing for a few hours.

For your sampling, why did you use only 62 notes instead of the full 88-keys on a traditional piano?

This is a great question. Originally, I cut the range because I was worried about my vocab size being too large. Although in practice, pianists almost never use notes outside that range (in my entire data set, it meant cutting out 3 notes). It should all work with the full 88 keys as well, although those extra notes are so rare in the training data that the model will never learn to predict any of them.

Moving to Clara's music generation, it appears that you started using arbitrary choices among the top best guesses instead of the very top best guess. Where did this insight come from?

Sampling the generation well is extremely important. My idea was that in music there are often multiple options for next notes or chords that would all sound good.

So, rather than only take the model’s top prediction (which might fall into short repetitive loops), it is interesting to sometimes sample 2nd and 3rd guesses.

I think this would be an interesting topic for future work. Ideally, you might set up a beam search. At each generation step, keep your top 3 notes, then feed all of those into the model and predict 3 notes for each of those. Now, of the 9 generations, keep only the 3 best and feed those into the model, and so forth.

In music, we have a very common structure: play a short phrase once, repeat it exactly once, and then on the third time, start to repeat, but then continue on to a longer thought. I’d love to find a way to get a neural net to generate this.

Oh, I forgot to ask -- why the name Clara?

This is in honor of Clara Schumann. She was an incredible pianist and composer, living in Germany in the mid-1800’s. Here’s a gorgeous piano romance she composed, and she was considered one of the leading concert pianists of her day. She was also married to Robert Schumann, very close friends with Johannes Brahms, and mom to 8 kids.

Is your project publicly available for people to reproduce?

Yes! The project is available here: https://github.com/mcleavey/musical-neural-net

What role do you see for AI in music? When will composers start using tools like Clara? Sorry, that was two questions.

At the moment, I think the best use for a tool like Clara would be in partnership with a human composer. Clara is very good at coming up with new patterns and ideas, but then as I listen to a generation, I notice points where I’d then want to break away from Clara’s idea. As mentioned, I’m not a composer, but Clara was making me want to try again, so I can only imagine that a great composer could take this in very interesting directions.

On a more practical note, I can imagine AI music generation being used for film scores. It would be really interesting to condition the music generations on the emotion or timing of the scene. Plus, we’re much more forgiving about a film score not having a large scale plan - we usually just want music that adds to the mood, but doesn’t draw our attention to itself.

I’m imagining an app that lets you play a few notes on a piano, and then Clara will suggest the next few notes to play. Is that possible with Clara? It could be a songwriter’s dream. The end of songwriter’s block!

That’s a great idea! It should definitely be possible if you played on a keyboard that can record a midi file. There’s a midi-to-encoding file in the project that could take that and turn it into a prompt for Clara.

Who are your own favorite composers?

That’s a tough question, there are too many! I always come back to Brahms and Rachmaninoff on the classical side. Rachmaninoff’s cello and piano sonata is probably my all-time favorite piece both to play and to listen to (especially the third movement!). I’m also obsessed with Ella Fitzgerald, and I love so many of the songs she sang.

Have you written any music yourself?

Sadly, no. When I was 5, I composed a piece and I was very excited about it, and then a few days later realized it was actually something I’d heard Sesame Street. That was probably the peak of my composing career.

As someone who used to make games and now works at OpenAI, have you tried your hand at Dota 2 yet?

No, I really need to try it. We have a games night occasionally at OpenAI where people hang out and play Dota. I’ve picked up a bit from watching the matches, but I still feel like they’re speaking a foreign language, it’s such an involved game! It’s exciting to be around the project though.

What are you hoping to learn or tackle next in your career?

My main goal is to build things that help people. It’s why I’ve been drawn to creating educational games in the past, and why I love the OpenAI mission statement.

Any advice for people who want to get started with deep learning?

I wrote a blog post about my favorite resources for getting started. My main advice is just to dive in. It’s easy to feel overwhelmed and to think you don’t have the right background, but by now there are some great online courses. I’d recommend starting at the level of fastai or Keras, and then dive into the details of PyTorch or TensorFlow afterwards if you find yourself pushing the boundaries.

In a recent fast.ai lecture, Jeremy Howard mentioned his advice to you to “Pick one project and make it fantastic.” How did you interpret this advice?

I’m very guilty of this one - I tend to have a million ideas, and I start lots of projects. I took this advice from Jeremy to mean: have the discipline to focus on one project and really follow through with it. Work through the boring details, try to make the code well organized and commented. Also, remember that if you’re applying for a job, first impressions do matter. It’s worth the effort of making a website or other display for it that will catch people’s attention.

You’ve written about the difference between active learning and passive learning. Can you elaborate a bit more on that?

I think there are two different levels of learning a new area as a programmer. There’s the passive level where you can follow code that’s already written, and then there’s the active level where you can write that from scratch, or where you can make changes to it and experiment with that. In the same way, I feel I only really know a topic when I can take what I’ve learned and then teach it to someone else. It’s absolutely key to get to that second level of understanding and fluency.

Finally, is it too late for me to learn how to play piano?

You should go for it! The only thing I’d say is to be patient and to enjoy learning. I often think the great advantage kids have over adults is that they’re fine with being bad at something for a long time. It takes kids 5-10 years to get good at piano, but they don’t worry that they sound awful along the way. It’s all about loving that feeling of starting something totally new.

This was great! I’m going to get that keyboard soon. Thank you, Christine, for chatting with me. Where can people go to learn more about you and your work?

Thanks, these were great questions! You can visit my website or I’m also active on Twitter.

A couple of months after this interview Christine published MuseNet, where she used GPT-2, a transformer base model, to generate music with amazing results. 🎉

Introducing MuseNet, a neural network which discovered how to generate music using many different instruments and styles.

— OpenAI (@OpenAI) April 25, 2019

Listen & interact: https://t.co/yudNpaerz9

MuseNet will play an experimental concert today from 12–3pmPT on livestream: https://t.co/CRx0goxVrh pic.twitter.com/7tPMEd4tpa

We also recommend to watch this small video interview with Andrew Ng.

Christine finished the Deep Learning Specialization a year ago. Now she's a full-time OpenAI research scientist building neural networks that create original music. Christine and Andrew chat about the tech behind her latest project, MuseNet, and her advice for those wanting to start their own careers in ML.